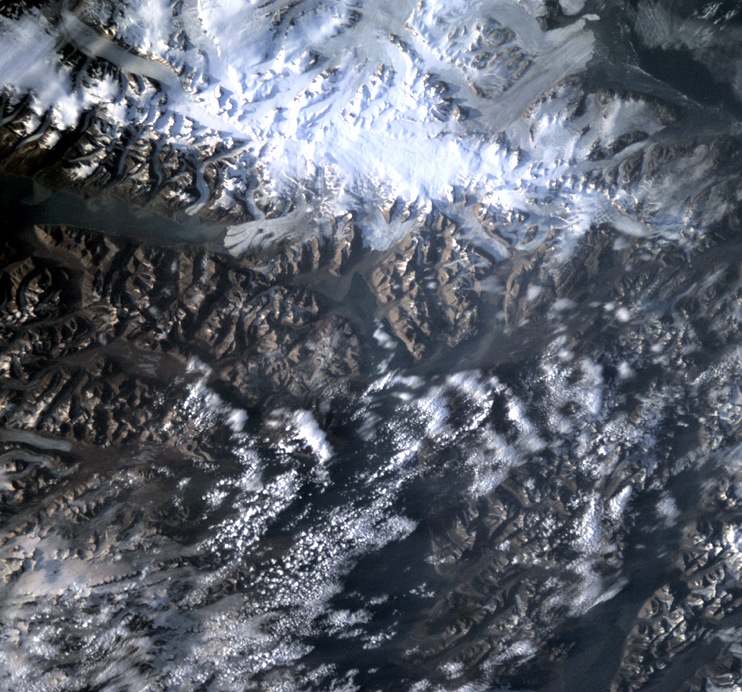

For decades Earth observation satellites have been monitoring the ever-changing home planet; the next step is to enable them to recognize what they see.

The latest public challenge for the machine learning community from ESA’s Advanced Concepts Team is to train satellite software to identify features within the images it acquires, with the winning team getting the unique opportunity to load their solution onto ESA’s OPS-SAT nanosatellite and test it in orbit.

“A satellite that learns to interpret its data would be much more autonomous and efficient,” comments ACT scientific crowd-sourcing expert Marcus Märtens. “It will be able to make decisions without having to continuously rely on human oversight — knowing for instance that images of rivers or agricultural fields should be downlinked to the ground, but others can be safely dumped to conserve scarce onboard memory.”

Edge computing expert Gabriele Meoni of ESA’s Ф-lab at ESRIN, which focuses on Earth observation and developed this challenge jointly with the ACT, explains, “ESA’s AI-equipped Ф-sat-1, aboard the Federated Satellite Systems (FSSCat) CubeSat, has already demonstrated the benefits of AI on-board – it is able to detect images filled with cloud cover and set these aside.

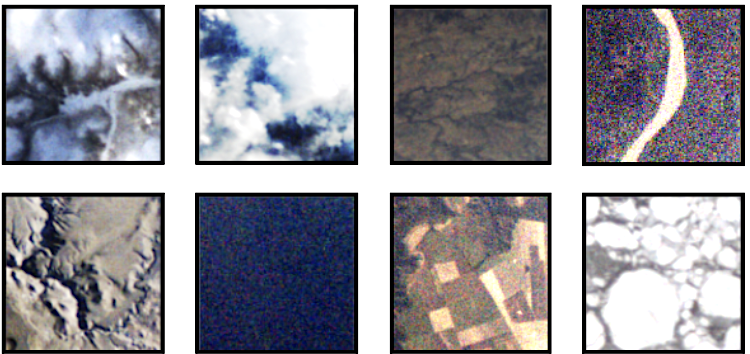

“With our new ‘OPS-SAT case’ competition, we seek to take this approach further. Participating teams receive 26 full-sized images acquired by the OPS-SAT CubeSat, which include small 200×200-pixel crops or ‘tiles’ identified with one of eight different classifications – Snow, Cloud, Natural, River, Mountain, Water, Agricultural, or Ice – with a total of ten examples of each type, representing a baseline for feature identification.”
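To make the size of the labelled set concrete, here is a minimal sketch of how a 200×200-pixel tile might be cropped from a larger frame, and how many labelled tiles the figures above imply. The `crop_tile` helper, the list-of-rows image representation and the 400×600 stand-in frame are assumptions made for illustration; the article does not describe the real image dimensions or the tooling used by the organisers.

```python
TILE_SIZE = 200  # tile edge length in pixels, as stated in the article

# The eight classes named in the article.
CLASSES = ["Snow", "Cloud", "Natural", "River",
           "Mountain", "Water", "Agricultural", "Ice"]
EXAMPLES_PER_CLASS = 10  # ten labelled examples of each type


def crop_tile(image, top, left, size=TILE_SIZE):
    """Return a size x size crop of a 2-D image stored as a list of rows.

    Hypothetical helper: it only illustrates what a 'tile' is.
    """
    height, width = len(image), len(image[0])
    if top < 0 or left < 0 or top + size > height or left + size > width:
        raise ValueError("tile does not fit inside the image")
    return [row[left:left + size] for row in image[top:top + size]]


# Synthetic stand-in frame (assumption): 400 rows by 600 columns of grey values.
image = [[(r + c) % 256 for c in range(600)] for r in range(400)]

tile = crop_tile(image, top=100, left=250)
labelled_tiles = len(CLASSES) * EXAMPLES_PER_CLASS  # 8 classes x 10 examples
```

With eight classes and ten examples each, the whole labelled set comes to only 80 tiles, which is what makes this a few-shot problem.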

Dario Izzo, heading the ACT, says, “This challenge is an example of AI ‘few-shot learning’. As humans we don’t have to see a lot of cats to learn what is or isn’t a cat, just a few glimpses will be enough. What is needed for future space missions is an AI system that can form a concept from only limited examples given. This is a very challenging and modern problem from the AI point of view, and there is no commonly recognized way of achieving this.”

Gabriele comments, “What the teams have to do is train a satellite-sized neural network to accurately identify a further set of image tiles we have prepared from the images. The challenge is to achieve this with the relatively sparse set of examples we give them. The preferred route in machine learning is to use copious amounts of data – meaning thousands or even millions of images in practice – to train neural networks.”

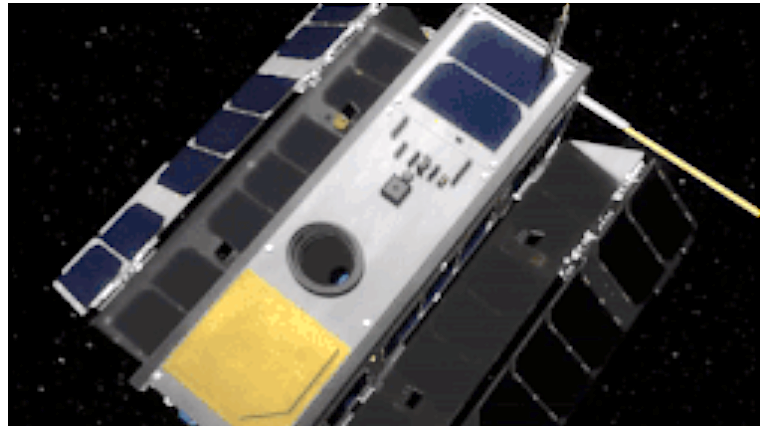

The challenge is based around ESA’s OPS-SAT mission, launched in 2019 as a flying software laboratory that is also a working Earth observation satellite. Despite being smaller than a shoebox, at just 30×10×10 cm, this CubeSat-class mission hosts an experimental computer ten times more powerful than that of any previous ESA mission. The dataset the teams receive will be raw, unprocessed images from OPS-SAT’s imager, including the annotated tiles.

This is the latest in a series of public competitions aimed at the AI and machine learning community and hosted on the ACT’s Kelvins website.

Dario explains, “This is different from what has come before because it is a deliberately data-centric challenge. The teams do not have to develop a software model, because this comes already in the form of the neural network aboard the satellite. What they have to do is devise a way to train this neural network so it can learn to classify the image tiles in an effective manner. They have to ask themselves: how can we present the data so that the neural network will adapt in the best possible way?”
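One common data-centric tactic, when the model is fixed and labelled examples are scarce, is to enlarge the training set with label-preserving transformations. The sketch below generates the eight rotated and mirrored variants of a square tile. It is an illustration only: the article does not say that teams use augmentation, the function names are hypothetical, and whether flips and rotations preserve the meaning of every class is an assumption.

```python
def rotate90(tile):
    """Rotate a square tile (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*tile[::-1])]


def flip_horizontal(tile):
    """Mirror a tile left to right."""
    return [row[::-1] for row in tile]


def dihedral_variants(tile):
    """Return the 8 rotated/mirrored versions of a square tile.

    The first entry is a copy of the input; the rest are its
    rotations by 90, 180 and 270 degrees and their mirror images.
    """
    variants = []
    current = [list(row) for row in tile]
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate90(current)
    return variants


# Tiny 2x2 stand-in tile so the result is easy to inspect by hand.
example = [[1, 2],
           [3, 4]]
variants = dihedral_variants(example)
```

Applied to a set of 80 labelled tiles, this would yield up to 640 training examples without collecting any new data, although the variants are of course not independent observations.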

Marcus adds, “There are lots of constraints to the challenge, fitting with the idea of operating in space, and moving the decision-making process out to the edge, into orbit aboard the satellite, as much as possible. It’s a very experimental and risky challenge, with lots of room for interpretation, but we think it is possible and look forward to seeing what we get back.”

More information on the challenge and how to take part is available on the ACT’s Kelvins website. The competition commences on Friday, July 1.